Lubosz Sarnecki

July 04, 2016

Three years ago, in 2013, I released an OpenGL fragment shader that you could use with the GstGLShader element to view side-by-side stereoscopic video on the Oculus Rift DK1 in GStreamer. It used the headset only as a stereo viewer and didn’t provide any tracking; it was just a quick way to make any use of the DK1 with GStreamer at all. Side-by-side stereoscopic video was becoming very popular, due to “3D” movies and screens gaining popularity. With its 1.6 release, GStreamer added support for stereoscopic video, though I didn’t test side-by-side stereo with that.

Stereoscopic video does not provide full 3D information, since the perspective is always given for a certain view, or parallax. Mapping the stereo video onto a sphere does not solve this, but at least it stores color information independent of the view angle, so it’s way more immersive and gets described as a telepresence experience. A better solution for “real 3D” video would of course be capturing a point cloud with as many sensitive sensors as possible, filtering it and constructing mesh data out of it for rendering, but more on that later.

Nowadays mankind projects its imagery mostly onto planes, as seen on most LCD Screens, canvases and Polaroids. Although this seems to be a physical limitation, there are some ways to overcome it, in particular with Curved LCD screens, fancy projector setups rarely seen in art installations and of course most recently: Virtual Reality Head Mounted Displays.

Projecting our images on different shapes than planes in virtual space is not new at all though. Still panoramas have been very commonly projected onto cylinders, not only in modern photo viewer software, but also in monumental paintings like the Racławice Panorama, which is housed inside a cylinder shaped building.

(Source)

But to store information from each angle in 3D space we need a different geometric shape.

Spherical projection is used very commonly, for example in Google Street View and of course in VR video.

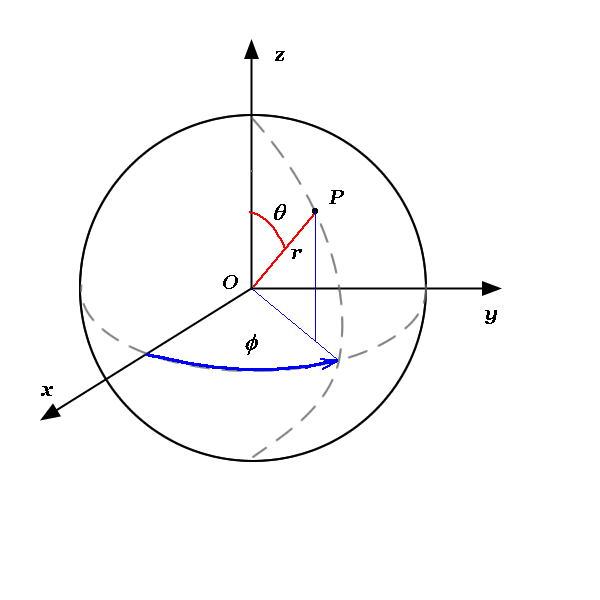

As we are in 3D, a single regular angle wouldn’t be enough to describe all directions on a sphere, since 360° can only describe a full circle, a 2D shape. In fact we have 2 angles, θ and φ, also called inclination and azimuth.

(Source)

radius r, inclination θ, azimuth φ

You can convert between spherical coordinates and Cartesian coordinates like this:

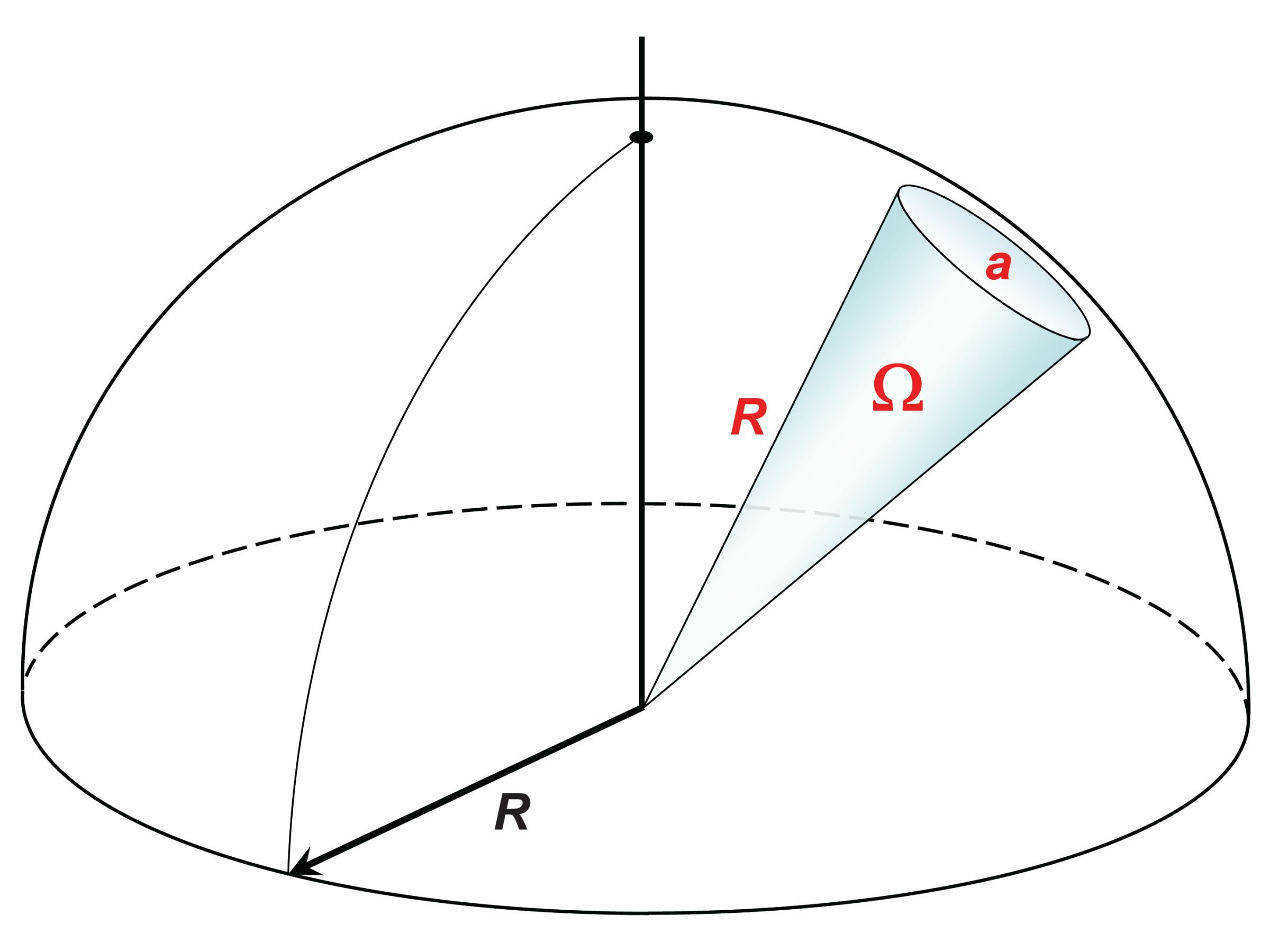
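
As a small illustration of that conversion, here is a sketch in Python. It assumes the convention from the caption above (inclination θ measured from the z axis, azimuth φ measured in the x-y plane) and angles in radians; the function names are made up for this example.

```python
import math


def spherical_to_cartesian(r, theta, phi):
    """Convert radius r, inclination theta and azimuth phi (radians) to (x, y, z)."""
    x = r * math.sin(theta) * math.cos(phi)
    y = r * math.sin(theta) * math.sin(phi)
    z = r * math.cos(theta)
    return x, y, z


def cartesian_to_spherical(x, y, z):
    """Convert (x, y, z) back to (r, theta, phi); the origin maps to all zeros."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0, 0.0
    theta = math.acos(z / r)   # inclination, 0..pi
    phi = math.atan2(y, x)     # azimuth, -pi..pi
    return r, theta, phi
```

Converting a point to Cartesian coordinates and back should return the original radius and angles, up to floating point error.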

For describing the angle on a sphere we can use the solid angle Ω, calculated by integrating over two angles θ and φ.

The unit for the solid angle is steradian, where the full sphere is 4π sr, hence the hemisphere 2π sr.
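
Written out, and assuming θ is the inclination and φ the azimuth as above, the integral and the two values just mentioned look like this:

```latex
\Omega = \iint_S \sin\theta \,\mathrm{d}\theta \,\mathrm{d}\varphi

\Omega_{\text{sphere}}
  = \int_0^{2\pi} \int_0^{\pi} \sin\theta \,\mathrm{d}\theta \,\mathrm{d}\varphi
  = 2\pi \cdot 2 = 4\pi \ \text{sr}

\Omega_{\text{hemisphere}}
  = \int_0^{2\pi} \int_0^{\pi/2} \sin\theta \,\mathrm{d}\theta \,\mathrm{d}\varphi
  = 2\pi \cdot 1 = 2\pi \ \text{sr}
```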

(Source)

This is why the term 360° video does not suit spherical video: there are other popular shapes for projecting video with φ = 360°, like cylinders. 180° video also usually uses a half cylinder. With video half spheres, or hemispheres, you could for example texture a skydome.

In Gst3D, which is a small graphics library currently supporting OpenGL 3+, I am providing a sphere built with one triangle strip. It has an equirectangular mapping of UV coordinates, which you can see in yellow to green. You can switch to wireframe rendering with Tab in the vrtestsrc element.

$ gst-launch-1.0 vrtestsrc ! glimagesink
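
To make the idea of a single-strip sphere with equirectangular UVs more concrete, here is a rough Python sketch. It is not the Gst3D code; the function name, the y-up axis choice and the use of degenerate triangles to join rows into one strip are assumptions made for this example.

```python
import math


def build_uv_sphere(stacks, slices, radius=1.0):
    """Return (positions, uvs, indices) for a sphere drawn as one triangle strip.

    u runs 0..1 along the azimuth and v runs 0..1 along the inclination,
    which is exactly the layout of an equirectangular image.
    """
    positions, uvs = [], []
    for i in range(stacks + 1):
        v = i / stacks
        theta = v * math.pi              # inclination, 0..pi
        for j in range(slices + 1):
            u = j / slices
            phi = u * 2.0 * math.pi      # azimuth, 0..2*pi
            positions.append((
                radius * math.sin(theta) * math.cos(phi),
                radius * math.cos(theta),            # y is up (assumption)
                radius * math.sin(theta) * math.sin(phi),
            ))
            uvs.append((u, v))

    indices = []
    row = slices + 1
    for i in range(stacks):
        if i > 0:
            indices.append(i * row)                   # degenerate: repeat first index
        for j in range(row):
            indices.append(i * row + j)
            indices.append((i + 1) * row + j)
        if i < stacks - 1:
            indices.append((i + 1) * row + slices)    # degenerate: repeat last index
    return positions, uvs, indices
```

The vertex at u = 0 is duplicated at u = 1 so that the texture seam can carry both UV values.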

For my HoVR demo in 2014 I made Python bindings for using OpenHMD in the Blender game engine and had a good experience with it. Its HMD support is very limited, since it currently only supports the IMU (Inertial Measurement Unit) of the DK2, but it is very lightweight, as it uses hidapi directly and requires no daemon. In contrast, the proprietary Oculus driver OVR, which I used in my demo HoloChat in 2015, is very unstable and unsupported.

This is why I decided to use OpenHMD as a minimal approach for initial VR sensor support in GStreamer. For broader headset support, and because I think it will be adopted as a standard, I will implement support for OSVR in gst-plugins-vr in the future.

No problem, you can view spherical videos and photos anyway. Currently you can compile gst-vr without OpenHMD and view things with an arcball camera, without stereo. So you can still view spherical video projected correctly and navigate with your mouse. Ideally this fallback would be selected at run time rather than at compile time.
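
For readers unfamiliar with arcball cameras, the following Python sketch shows the usual idea: both ends of a mouse drag are projected onto a virtual unit sphere, and the rotation is taken between the two resulting points. This is a generic textbook version with made-up function names, not the camera code in gst-vr.

```python
import math


def to_sphere(px, py, width, height):
    """Project a window pixel onto a virtual unit sphere centred in the window."""
    x = 2.0 * px / width - 1.0
    y = 1.0 - 2.0 * py / height      # window y grows downwards
    d = x * x + y * y
    if d <= 1.0:
        return (x, y, math.sqrt(1.0 - d))
    n = math.sqrt(d)                 # outside the ball: clamp to its edge
    return (x / n, y / n, 0.0)


def arcball_rotation(p0, p1, width, height):
    """Return (axis, angle in radians) for a drag from pixel p0 to pixel p1."""
    a = to_sphere(p0[0], p0[1], width, height)
    b = to_sphere(p1[0], p1[1], width, height)
    axis = (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])
    length = math.sqrt(sum(c * c for c in axis))
    if length < 1e-12:
        return (0.0, 0.0, 1.0), 0.0  # no movement, no rotation
    dot = max(-1.0, min(1.0, sum(i * j for i, j in zip(a, b))))
    return tuple(c / length for c in axis), math.acos(dot)
```

Dragging from the window centre to the middle of the right edge gives a quarter turn around the vertical axis.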

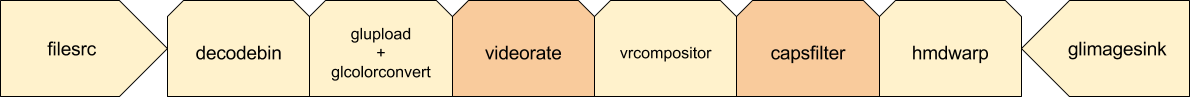

Right, they are the core components required in a VR renderer. Stereo rendering and projection according to the IMU sensor happen in vrcompositor, which can also be used without an HMD, with mouse controls.

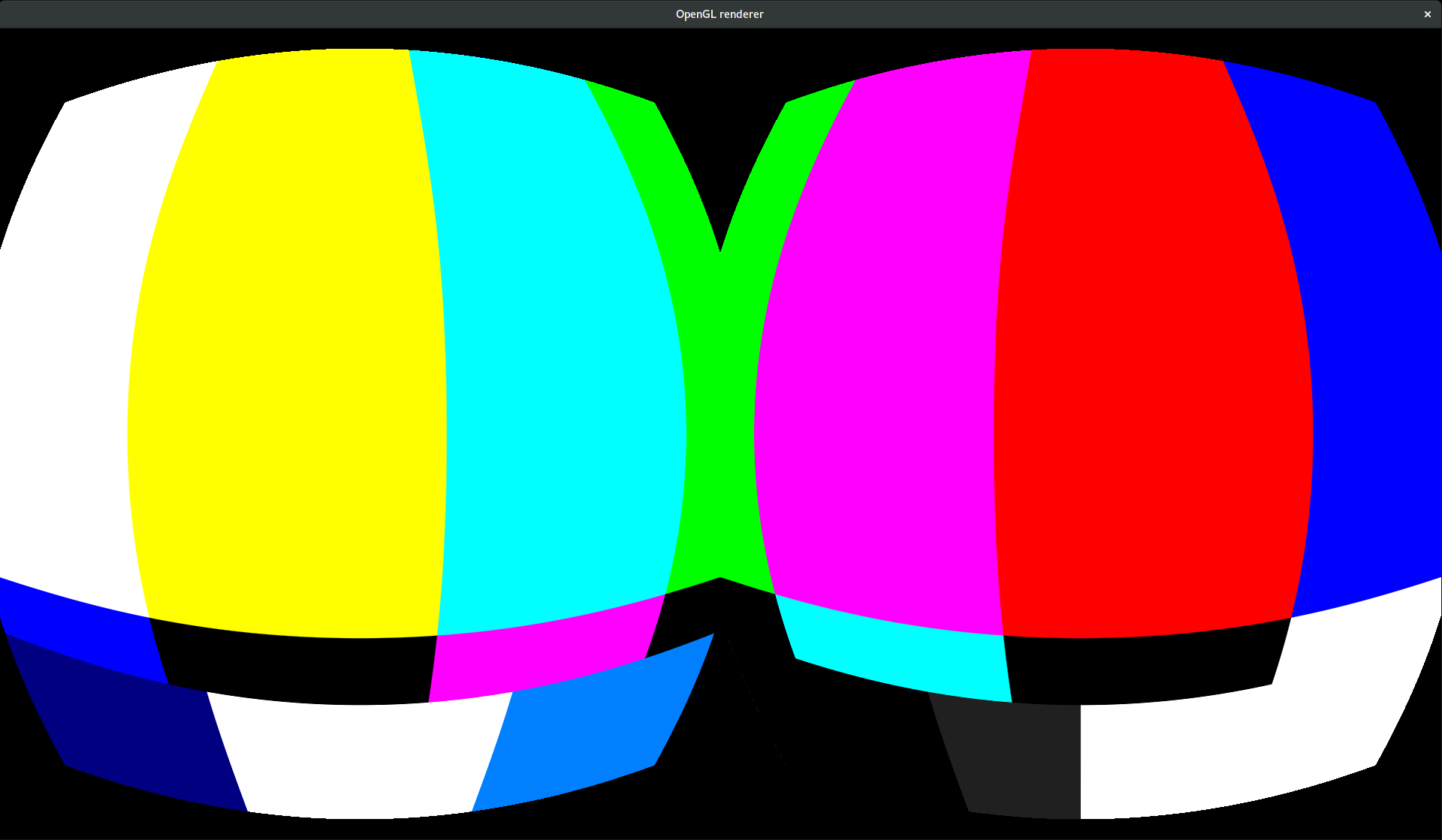

For computing the HMD lens distortion, or barrel distortion, I use a fragment shader based approach. I know that there are better methods for doing this, but this seemed like a simple and quick solution, since it does not really eat up much performance.

Currently the lens attributes are hardcoded for Oculus DK2, but I will soon support more HMDs, in particular the HTC Vive, and everything else that OSVR support could offer.

$ GST_GL_API=opengl gst-launch-1.0 gltestsrc ! hmdwarp ! glimagesink
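
The per-pixel math of a shader-based lens warp can be sketched in Python as follows. This is a common radial polynomial model and only an assumption about the general approach; the coefficients are placeholder values, not the DK2 attributes hardcoded in hmdwarp, and the function name is invented for this example.

```python
def warp_uv(u, v, center=(0.5, 0.5), k=(1.0, 0.22, 0.24, 0.0)):
    """Map an output texture coordinate to the source coordinate to sample.

    The offset from the lens centre is scaled by a polynomial in the squared
    radius. k holds placeholder coefficients (assumption), not real lens data.
    """
    dx = u - center[0]
    dy = v - center[1]
    r_sq = dx * dx + dy * dy
    scale = k[0] + k[1] * r_sq + k[2] * r_sq ** 2 + k[3] * r_sq ** 3
    return center[0] + dx * scale, center[1] + dy * scale
```

Because the sampling position is pushed further out as the radius grows, the resulting image is squeezed towards the centre, which gives the barrel shape. A fragment shader would evaluate the same expression once per pixel and per eye.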

$ gst-launch-1.0 uridecodebin uri=file:///home/bmonkey/Videos/elephants.mp4 ! \
glupload ! glcolorconvert ! videorate ! vrcompositor ! \
video/x-raw\(memory:GLMemory\), width=1920, height=1080, framerate=75/1 ! \
hmdwarp ! gtkglsink

In this example pipeline I use GtkGLSink, which works fine but only provides a refresh rate of 60Hz, which is not really optimal for VR. This restriction may reside inside Gtk or window management. I still need to investigate it, since the restriction also appears when using the Gst Overlay API with Gtk.

You can just do an image search for “equirectangular” and will get plenty of images to view. Using imagefreeze in front of vrcompositor makes this possible. Image support is not implemented in SPHVR yet, but you can just run this pipeline:

$ gst-launch-1.0 uridecodebin uri=http://4.bp.blogspot.com/_4ZFfiaaptaQ/TNHjKAwjK6I/AAAAAAAAE30/IG2SO24XrDU/s1600/new.png ! \
imagefreeze ! glupload ! glcolorconvert ! vrcompositor ! \
video/x-raw\(memory:GLMemory\), width=1920, height=1080, framerate=75/1 ! \
hmdwarp ! glimagesink
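
What makes an equirectangular image so convenient is that a view direction maps to a pixel with two simple angle computations. Here is a Python sketch of that lookup; the axis convention (y up, azimuth measured from +x towards +z) and the function name are assumptions for this example and not necessarily what vrcompositor uses.

```python
import math


def direction_to_equirect_pixel(x, y, z, width, height):
    """Return the (column, row) of an equirectangular image seen along (x, y, z)."""
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    phi = math.atan2(z, x) % (2.0 * math.pi)       # azimuth, 0..2*pi
    theta = math.acos(max(-1.0, min(1.0, y)))      # inclination from +y, 0..pi
    u = phi / (2.0 * math.pi)
    v = theta / math.pi
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row
```

Looking along the horizon lands on the middle row of the image, while looking straight up or down lands on the first or last row.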

In most VR applications a second output window is created to spectate the VR experience on the desktop. In SPHVR I use the tee element for creating 2 GL sinks and put them in 2 Gtk windows via the GStreamer Overlay API, since GtkGLSink still seems to have its problems with tee.

$ GST_GL_XINITTHREADS=1 gst-launch-1.0 filesrc location=~/video.webm ! \
decodebin ! videoscale ! glupload ! glcolorconvert ! videorate ! \
vrcompositor ! \
video/x-raw\(memory:GLMemory\), width=1920, height=1080, framerate=75/1 ! \
hmdwarp ! tee name=t ! queue ! glimagesink t. ! queue ! glimagesink

Pronounced sphere, SPHVR is a Python video player using gst-plugins-vr. Currently it is capable of opening a URL of an equirectangular mapped spherical video.

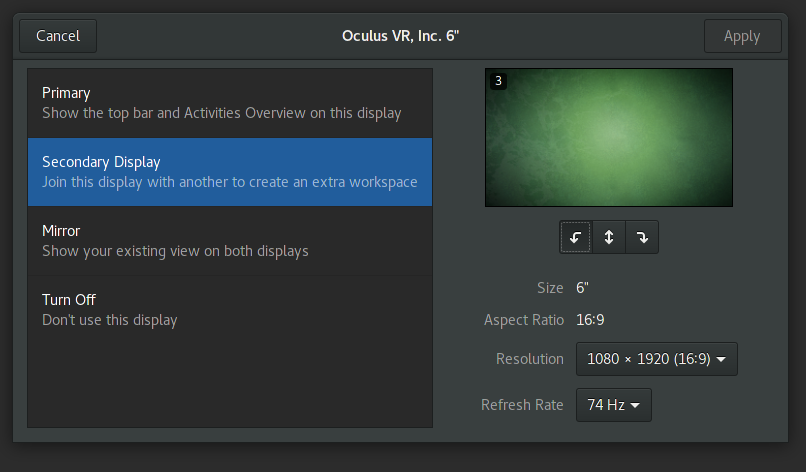

You need to configure your Oculus DK2 screen to be horizontal, since I do not do a rotation in SPHVR yet. Other HMDs may not require this.

SPHVR detects your DK2 display using GnomeDesktop and Gdk if available and opens a full screen VR window on it.

To open a video

$ sphvr file:///home/bmonkey/Videos/elephants.mp4

Spherical video sensors range from consumer devices like the Ricoh Theta for $300 to the professional Nokia Ozo for $60,000. But in general you can just use 2 wide angle cameras and stitch them together correctly. This functionality is mostly found in photography software like Hugin, but will need to find a place in GStreamer soon. GSoC anyone?

The other difficulty in creating spherical video, besides stitching, is of course stereoscopy. The parallax being different for every pixel and eye makes it difficult to transform the video losslessly from the sensor into the intermediate format and on to the viewer. Nokia’s Ozo records stereo with 8 stereo camera pairs in each direction, adjusted to a horizontal default eye separation assumption for the viewer. This means that rotating your head around the axis you are looking along (for example tilting the head to the right) will still produce a wrong parallax.

John Carmack stated in a tweet that his best prerendered stereo VR experience was with renderings from Octane, a renderer from OTOY, who also created the well known Brigade, a path tracer with real time capabilities. You can find the stereoscopic cube maps on the internet.

So it is apparently possible to encode correct projection in a prerendered stereoscopic cube map, but I still assume that the stereo quality would be highly isotropic, especially when translating the viewer position.

Stereoscopic spherical video does not store real depth information either. We could, however, encode depth information projected spherically around the viewer: a spherical video plus a spherical depth texture, constructed from whatever sensors are available, would be more immersive and more correct than having 3D information as a plain stereo image. But this solution would still lack the ability to move in the room.

I think we should use a better format for storing 3D video.

If you want to walk around the stuff in your video, maybe one could call this holographic, you need a point cloud with absolute world positions. This of course could be converted into a vertex mesh with algorithms like Marching Tetrahedra, compressed and sent over the network.

Real 3D sensors like laser scanners, or time-of-flight cameras like the Kinect v2, are a good start. You can of course also reconstruct 3D positions from a stereoscopic camera, but the result of that is a point cloud as well.

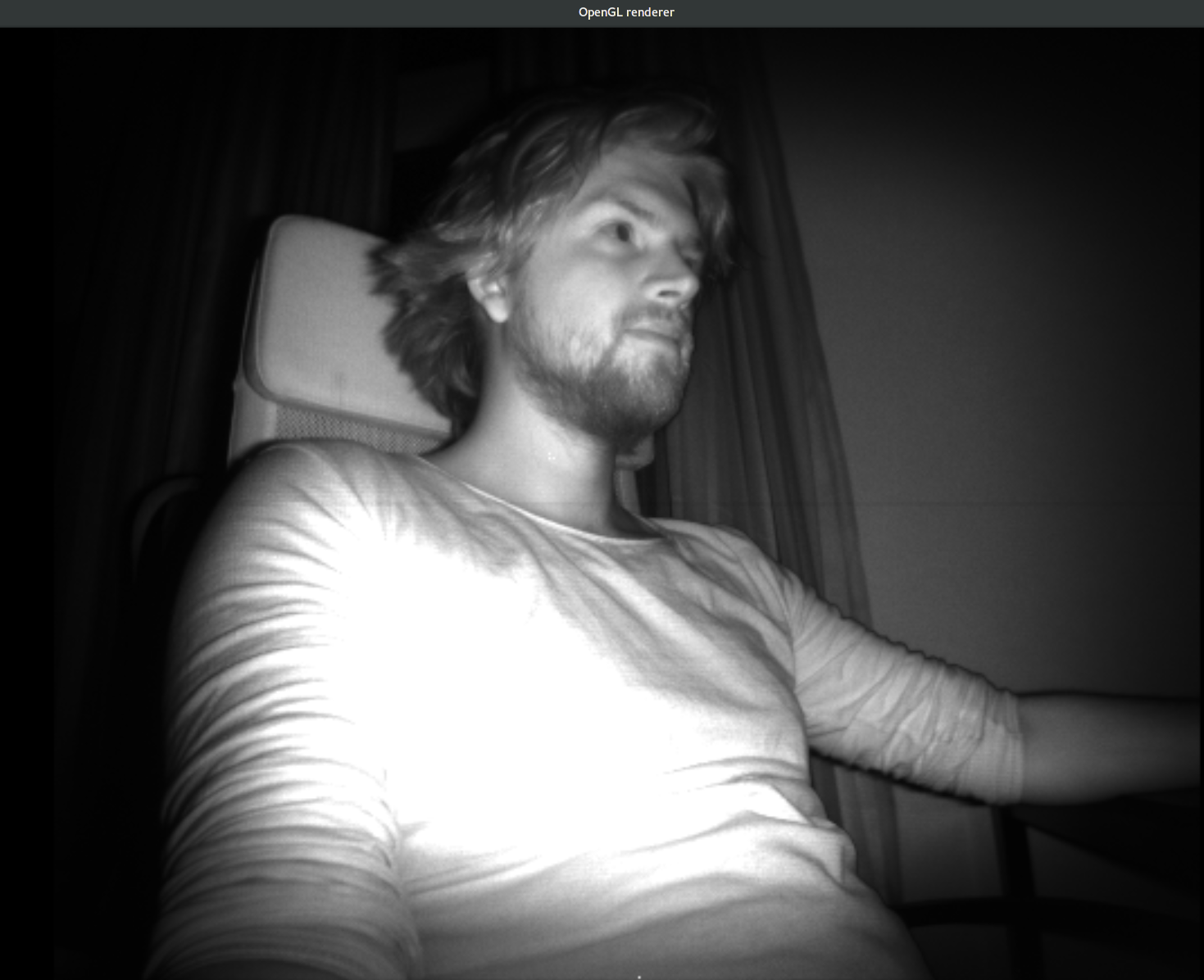

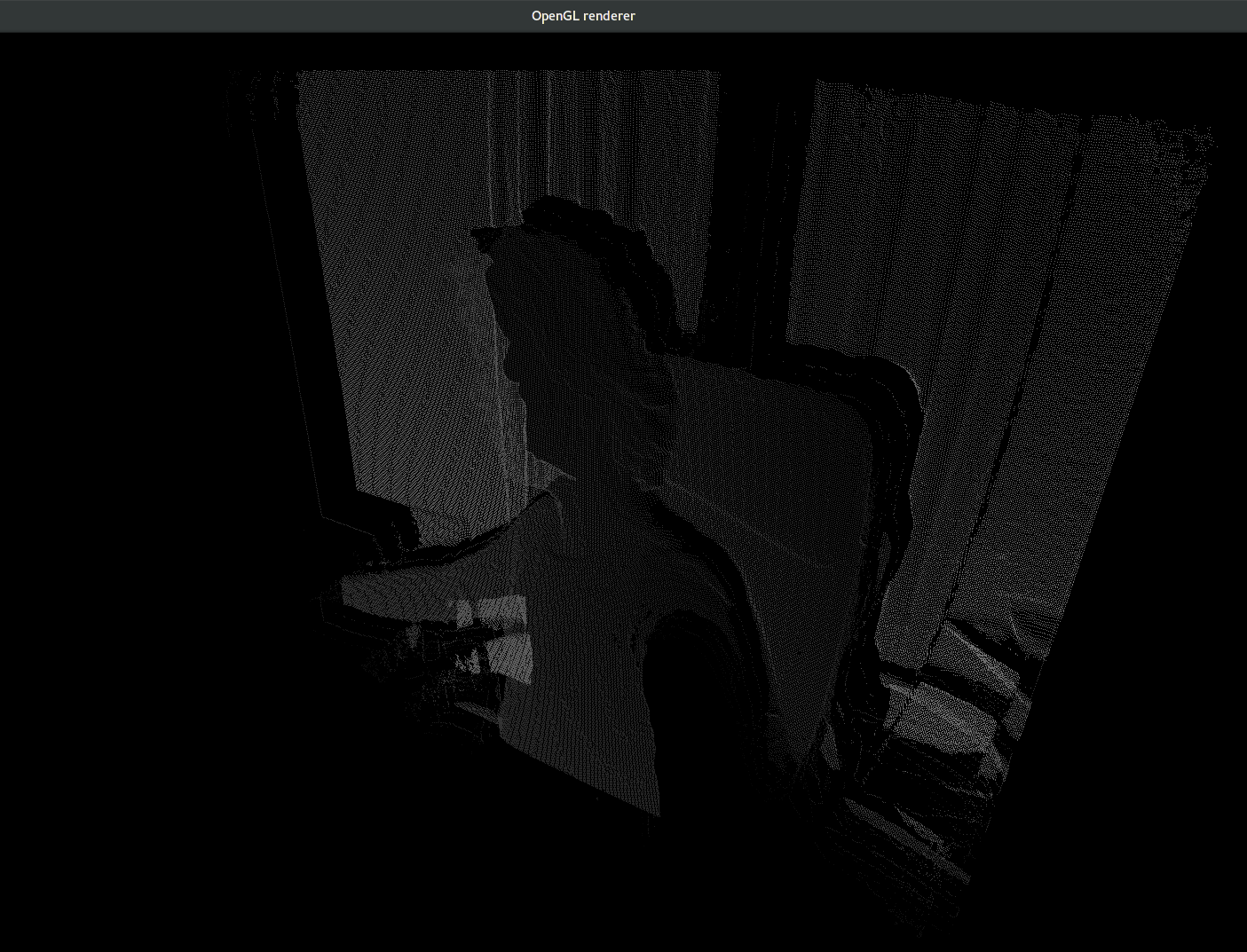

In a previous post I described how to stream point clouds over the network with ROS, which I also did in HoloChat. Porting this functionality to GStreamer was always something that tempted me, so I implemented a source for libfreenect2. This work is still pretty unfinished, since I need to implement 16bit float buffers in gst-video to transmit the full depth information from libfreenect2. So the point cloud projection is currently wrong, the color buffer is not mapped onto it yet, and no mesh is constructed. The code could also use some attention to performance, but here are my first results.

Show the Kinect v2 infrared image

$ gst-launch-1.0 freenect2src sourcetype=2 ! glimagesink

or color image

$ gst-launch-1.0 freenect2src sourcetype=1 ! glimagesink

View a point cloud from the Kinect

$ gst-launch-1.0 freenect2src sourcetype=0 ! glupload ! glcolorconvert ! \
pointcloudbuilder ! video/x-raw\(memory:GLMemory\), width=1920, height=1080 ! \
glimagesink
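
To illustrate what turning a depth image into a point cloud means in principle, here is a minimal Python sketch using a pinhole camera model. It is not what pointcloudbuilder does internally; the function name and the intrinsics fx, fy, cx, cy are hypothetical and would have to come from the sensor calibration.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) camera-space points.

    depth is a list of rows with one depth value per pixel, in metres.
    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    Pixels with a depth of 0 or less are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

For example, `depth_to_points([[0.0, 2.0], [1.0, 0.0]], 1.0, 1.0, 0.5, 0.5)` keeps only the two valid pixels and places them 2 m and 1 m in front of the camera.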

Since the point cloud is also a Gst3D scene, it can already be viewed with an HMD, and since it’s part of GStreamer, it can be transmitted over the network for telepresence, but there is no example doing this yet. More to see in the future.

You can find the source of gst-plugins-vr on GitHub. An Arch Linux package is available on the AUR. In the future I plan distribution via Flatpak.

In the future I plan to implement more projections, for example 180° / half cylinder stereo video and stereoscopic equirectangular spherical video. OSVR support, improving point cloud quality and point cloud to mesh construction via marching cubes or similar are also possible things to do. If you are interested in contributing, feel free to clone.

Comments (2)

Markus:

Nov 28, 2016 at 06:35 AM

We are looking for a VR streaming application and are wondering if this might work. Our clips are all side by side, 180 Degree Stereographic Videos. Love all the information on your site, Thank you.

Lubosz Sarnecki:

Nov 28, 2016 at 03:34 PM

Thanks for the feedback! We'd be happy to assist you with your project. Please send us an email at contact@collabora.com with more details on your requirements.
