Pekka Paalanen

February 16, 2016


How is an uncompressed raster image laid out in computer memory? How is a pixel represented? What are stride and pitch and what do you need them for? How do you address a pixel in memory? How do you describe an image in memory?

I tried to find a web page for dummies explaining all that, and all I could find was this. So, I decided to write it down myself with the things I see as essential.

Wikipedia explains the concept of raster graphics, so let us take that idea as a given. An image, or more precisely, an uncompressed raster image, consists of a rectangular grid of pixels. An image has a width and height measured in pixels, and the total number of pixels in an image is obviously width×height.

A pixel can be addressed with coordinates x,y after you have decided where the origin is and which way the coordinate axes go.

A pixel has a property called color, and it may or may not have opacity (or occupancy). Color is usually described as three numerical values, let us call them "red", "green", and "blue", or R, G, and B. If opacity (or occupancy) exists, it is usually called "alpha" or A. What R, G, B, and A actually mean is irrelevant when looking at how they are stored in memory. The relevant thing is that each of them is encoded with a certain number of bits. Each of R, G, B, and A is called a channel.

When describing how much memory a pixel takes, one can use units of bits or bytes per pixel. Both can be abbreviated as "bpp", so be careful which one is meant and favour more explicit names in code. Bits per channel is also sometimes used, and the channels of one pixel can each have a different number of bits. For example, the rgb565 format has 16 bits (2 bytes) per pixel, with 5 bits for each of the R and B channels and 6 bits for the G channel.
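To make the rgb565 example concrete, here is a minimal sketch that pulls the three channels out of one 16-bit pixel. It assumes the bits-in-a-unit convention with R in the top 5 bits, then 6 bits of G, then 5 bits of B; the helper names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical helpers for rgb565 stored as bits of a uint16_t:
 * R = bits 15:11, G = bits 10:5, B = bits 4:0. */
static inline uint8_t rgb565_r(uint16_t v) { return (v >> 11) & 0x1f; } /* 5 bits */
static inline uint8_t rgb565_g(uint16_t v) { return (v >> 5) & 0x3f; }  /* 6 bits */
static inline uint8_t rgb565_b(uint16_t v) { return v & 0x1f; }         /* 5 bits */
```

The results stay in their native ranges (0 to 31 for R and B, 0 to 63 for G); scaling them to 8 bits would be a separate step.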

Pixels do not come in arbitrary sizes. A pixel is usually 32 or 16 bits, or 8 or even 1 bit. 32 and 16 bit quantities are easy and efficient to process on 32 and 64 bit CPUs. Your usual RGB image with 8 bits per channel is most likely stored in memory with 32 bit pixels; the extra 8 bits per pixel are simply unused (often marked with X in pixel format names). True 24 bits per pixel formats are rarely used in memory, because trading some memory for simpler and more efficient code or circuitry is almost always a net win in image processing. The term "depth" is often used to describe how many significant bits a pixel uses, as distinct from how many bits or bytes it occupies in memory. The usual RGB image therefore has 32 bits per pixel and a depth of 24 bits.
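As a small illustration of "32 bits per pixel, depth 24", the sketch below packs and reads an xrgb8888 pixel. The helper names are hypothetical, and the choice to write zero into the X bits is an assumption; code reading such pixels should simply ignore those bits.

```c
#include <stdint.h>

/* Hypothetical helpers for xrgb8888: 32 bits per pixel in memory, but only
 * 24 significant bits (the depth). Bits 31:24 are the unused X. */
static inline uint32_t xrgb8888_pack(uint8_t r, uint8_t g, uint8_t b)
{
    /* X is left as zero here; readers must not rely on its value. */
    return ((uint32_t)r << 16) | ((uint32_t)g << 8) | (uint32_t)b;
}

static inline uint32_t xrgb8888_color(uint32_t v)
{
    return v & 0x00ffffffu; /* drop the X bits, keep the 24 color bits */
}
```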

How channels are packed in a pixel is specified by the pixel format. There are dozens of pixel formats. When decoding a pixel format, you first have to understand whether it refers to an array of bytes (particularly used when each channel is 8 bits) or to bits in a unit. For instance, a 32 bits per pixel format has a unit of 32 bits, that is, uint32_t in C parlance.

What separates an array of bytes from bits in a unit is the endianness of the CPU architecture. If you have two pixel formats, one written in array-of-bytes form and one in bits-in-a-unit form, and they are equivalent on a big-endian architecture, then they will not be equivalent on a little-endian architecture, and vice versa. This is important to remember when you are mapping one set of pixel formats to another, for instance between OpenGL and anything else. Figure 1 shows three different pixel format definitions that produce identical binary data in memory.

It is also possible, though extremely rare, for architecture endianness to affect the order of bits within a byte as well. Pixman, undoubtedly inheriting it from the X11 pixel format definitions, is the only place where I have seen that.

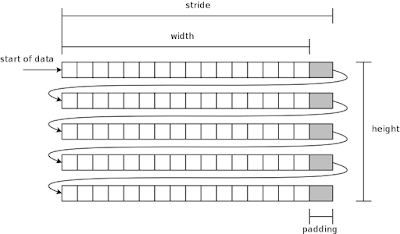
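The byte-order effect described above can be observed directly: store one argb8888 value as a uint32_t and copy out its bytes. This is a minimal sketch with hypothetical helper names; it assumes the bits-in-a-unit layout A = 31:24, R = 23:16, G = 15:8, B = 7:0.

```c
#include <stdint.h>
#include <string.h>

/* Copy one argb8888 pixel (bits of a uint32_t) out as the bytes
 * exactly as they sit in memory. */
static void argb8888_to_bytes(uint32_t pixel, uint8_t bytes[4])
{
    memcpy(bytes, &pixel, sizeof pixel);
}

/* Detect host byte order: on little-endian the low byte comes first. */
static int host_is_little_endian(void)
{
    const uint16_t one = 1;
    uint8_t first;
    memcpy(&first, &one, 1);
    return first == 1;
}
```

On a little-endian machine the bytes come out as B, G, R, A, so argb8888 in bits-in-a-unit form matches a byte-array format listing B, G, R, A. On a big-endian machine they come out as A, R, G, B instead.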

The usual way to store an image in memory is to store its pixels one by one, row by row. The origin of the coordinates is chosen to be the top-left corner, so that the leftmost pixel of the topmost row has coordinates 0,0. First there are all the pixels of the first row, then the second row, and so on, including the last row. A two-dimensional image has been laid out as a one-dimensional array of pixels in memory. This is shown in Figure 2.

Figure 2. The usual layout of pixels of an image in memory.
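Leaving row padding aside for the moment, the row-by-row layout means pixel x,y is simply the (y × width + x)th element of the one-dimensional array. A minimal sketch, with a hypothetical helper name, assuming a tightly packed image with the origin at the top-left corner:

```c
#include <stddef.h>

/* Index of pixel (x, y) in a tightly packed, row-by-row image with the
 * origin at the top-left corner: skip y full rows, then x pixels.
 * Row padding is not accounted for here. */
static size_t pixel_index(size_t x, size_t y, size_t width)
{
    return y * width + x;
}
```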

Besides the width×height pixels, each row can also have some padding at its end. The padding area is not used for storing anything; it only aligns the length of the row. Having padding requires a new concept: image stride, the distance in memory from the start of one row to the start of the next, that is, the row's pixels plus its padding.

Padding is often necessary for hardware reasons. The more specialized and efficient the hardware for pixel manipulation is, the more likely it is to have specific requirements on the alignment of row start and row length. For example, Pixman, and therefore also Cairo (its image backend in particular), requires that rows are aligned to 4 byte boundaries. This makes it easier to write efficient image manipulations using vectorized or other instructions that may even process multiple pixels at the same time.
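A common way to pick a stride under such a rule is to round the unpadded row length up to the next multiple of the alignment. The sketch below is a hypothetical helper, not part of Pixman or Cairo; it assumes the alignment is a power of two, such as the 4 bytes mentioned above.

```c
#include <stddef.h>

/* Smallest row length in bytes that holds `width` pixels and is a multiple
 * of `align` bytes. `align` must be a power of two (e.g. 4). */
static size_t aligned_stride(size_t width, size_t bytes_per_pixel, size_t align)
{
    size_t row = width * bytes_per_pixel;        /* unpadded row length */
    return (row + align - 1) & ~(align - 1);     /* round up to a multiple of align */
}
```

For example, a row of 5 pixels at 3 bytes per pixel needs 15 bytes, so with 4 byte alignment the stride becomes 16 bytes and each row carries 1 byte of padding.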

Image width is practically always measured in pixels. Stride on the other hand is related to memory addresses and therefore it is often given in bytes. Pitch is another name for the same concept as stride, but can be in different units.

You may have heard rules of thumb that stride is in bytes and pitch is in pixels, or vice versa. In practice stride and pitch are used interchangeably, so make sure you know the conventions of the code base you are working on. Do not trust your instinct on bytes vs. pixels here.

How do you compute the memory address of a given pixel x,y? The canonical formula is:

pixel_address = data_begin + y * stride_bytes + x * bytes_per_pixel.

The formula starts with the address of the first pixel in memory, data_begin, then skips to row y, where each row is stride_bytes long, and finally skips to pixel x on that row.
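Written as a function, the formula is easiest to get right on a byte pointer, where both stride_bytes and bytes_per_pixel are counted in bytes. A minimal sketch; the function name is hypothetical:

```c
#include <stddef.h>
#include <stdint.h>

/* The canonical formula on a byte pointer: skip y rows of stride_bytes
 * each, then x pixels of bytes_per_pixel each. */
static uint8_t *pixel_address(uint8_t *data_begin, size_t x, size_t y,
                              size_t stride_bytes, size_t bytes_per_pixel)
{
    return data_begin + y * stride_bytes + x * bytes_per_pixel;
}
```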

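In C code, if we have 32 bit pixels, we can write:

```c
uint32_t *p = data_begin;                  /* first pixel of the image */
p += y * stride_bytes / sizeof(uint32_t);  /* skip y rows; stride_bytes must be a multiple of 4 */
p += x;                                    /* skip x pixels within row y */
```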

Notice how the type of p affects the computations: pointer arithmetic counts in units of uint32_t instead of bytes.
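To see that the two ways of counting agree, the sketch below reaches the same pixel once through uint32_t pointer arithmetic and once through byte arithmetic. Both function names are hypothetical, and the equivalence assumes stride_bytes is a multiple of sizeof(uint32_t), as the 4 byte alignment rule above guarantees.

```c
#include <stddef.h>
#include <stdint.h>

/* Each p += 1 moves sizeof(uint32_t) = 4 bytes. */
static uint32_t *pixel_u32(uint32_t *data_begin, size_t x, size_t y, size_t stride_bytes)
{
    uint32_t *p = data_begin;
    p += y * stride_bytes / sizeof(uint32_t);
    p += x;
    return p;
}

/* Each b += 1 moves exactly 1 byte. */
static uint32_t *pixel_bytes(uint32_t *data_begin, size_t x, size_t y, size_t stride_bytes)
{
    uint8_t *b = (uint8_t *)data_begin;
    b += y * stride_bytes + x * sizeof(uint32_t);
    return (uint32_t *)b;
}
```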

Let us assume the pixel format in this example is argb8888, which is defined in the bits-in-a-unit form, and we want to extract the R value:

```c
uint32_t v = *p;               /* load the whole 32 bit pixel */
uint8_t r = (v >> 16) & 0xff;  /* R is bits 23:16 in argb8888 */
```
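The same shift-and-mask pattern extends to every channel. A minimal sketch with a hypothetical struct and function name, assuming argb8888 in the bits-in-a-unit form (A = 31:24, R = 23:16, G = 15:8, B = 7:0):

```c
#include <stdint.h>

struct argb { uint8_t a, r, g, b; };

/* Shift each channel down to the lowest bits, then mask off what is above. */
static struct argb argb8888_unpack(uint32_t v)
{
    struct argb c;
    c.a = (v >> 24) & 0xff;
    c.r = (v >> 16) & 0xff;
    c.g = (v >> 8) & 0xff;
    c.b = v & 0xff;
    return c;
}
```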

Finally, Figure 3 gives a cheat sheet.

Figure 3. How to compute the address of a pixel.

Now we have covered the essentials, and you can stop reading. The rest is just good to know.

In the above we have assumed that the image origin is the top-left corner and that rows are stored top-most first. The most notable exception to this is the OpenGL API, which defines image data to be stored bottom-most row first. (Traditionally, the BMP file format does this too.)
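For bottom-up storage, only the row term of the formula changes: row y counted from the top is stored as row height − 1 − y. A minimal sketch with a hypothetical function name, assuming y is still counted from the top edge:

```c
#include <stddef.h>
#include <stdint.h>

/* Address of pixel (x, y), y counted from the top, in an image whose rows
 * are stored bottom-most first. Requires y < height. */
static uint8_t *pixel_address_bottom_up(uint8_t *data_begin, size_t x, size_t y,
                                        size_t height, size_t stride_bytes,
                                        size_t bytes_per_pixel)
{
    return data_begin + (height - 1 - y) * stride_bytes + x * bytes_per_pixel;
}
```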

In the above, we have talked about single-planar formats. That means there is only a single two-dimensional array of pixels forming an image. Multi-planar formats use two or more two-dimensional arrays to form an image.

A simple example with an RGB-image would be to store R channel in the first plane (2D-array) and GB channels in the second plane. Pixels on the first plane have only R value, while pixels on the second plane have G and B values. However, this example is not used in practice.

Common, real-world uses of multi-planar images are the various YUV color formats. The Y channel is stored on the first plane and the UV channels on the second plane, for instance. One benefit of this is that the UV plane can be sub-sampled: its resolution could be, for example, only half that of the Y plane, saving some memory.
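To put a number on the saving, the sketch below computes plane sizes for an NV12-style layout, chosen here as an illustrative assumption: plane 0 has one 8 bit Y sample per pixel, and plane 1 has interleaved 8 bit U and V samples at half the width and half the height of plane 0. The struct and function names are hypothetical, rows are assumed unpadded, and width and height are assumed even.

```c
#include <stddef.h>

struct two_planes { size_t y_bytes, uv_bytes; };

static struct two_planes nv12_sizes(size_t width, size_t height)
{
    struct two_planes s;
    s.y_bytes = width * height;                  /* 1 byte of Y per pixel */
    s.uv_bytes = (width / 2) * (height / 2) * 2; /* one U+V byte pair per 2x2 pixel block */
    return s;
}
```

For a 640×480 image this gives 307200 bytes of Y and 153600 bytes of UV, 460800 bytes in total, which is half of the 921600 bytes that three full-resolution 8 bit planes would take.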

If you have read about GPUs, you may have heard of tiling or tiled formats (a tiled renderer is a different thing). These are special pixel layouts where an image is not stored row by row but rectangular block by rectangular block. Tiled formats are far too wild and varied to explain here, but if you want a taste, take a look at Nouveau's documentation on G80 surface formats.
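Just to give the general idea, here is a toy tiled layout. It is entirely hypothetical and does not correspond to any real GPU format: the image is cut into 4×4 pixel tiles, the tiles are stored row by row, and the pixels inside each tile are also stored row by row. The function returns a pixel index rather than a byte address and assumes the width is a multiple of the tile width.

```c
#include <stddef.h>

#define TILE_W 4
#define TILE_H 4

static size_t tiled_index(size_t x, size_t y, size_t width)
{
    size_t tiles_per_row = width / TILE_W;
    size_t tile = (y / TILE_H) * tiles_per_row + (x / TILE_W); /* which tile */
    size_t inner = (y % TILE_H) * TILE_W + (x % TILE_W);       /* position inside the tile */
    return tile * (TILE_W * TILE_H) + inner;
}
```

Note how pixel 4,0 lands at index 16, right after the whole first tile, instead of at index 4 as it would in a row-by-row layout.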


Comments (9)

Diego Gonzalez:

Aug 07, 2018 at 10:39 AM

Excellent post, very clear, thanks a lot! I wonder how the alpha channel is stored in memory, but I guess this depends on the image format: it takes as much space as another channel and is then interpreted by the system displaying the picture based on the format? Anyway, thank you again! Diego

Pekka Paalanen:

Aug 07, 2018 at 11:29 AM

Hi, you are correct. You can see an alpha channel in Figure 1. It is the "a8" there. Storage wise it is no different from R, G or B, and is extracted the same way. This is the most usual way to store an alpha channel, and it is quite rare to find e.g. multi-planar or YUV format images with an alpha channel.

The alpha channel, or alpha values, do have one important catch. The image can have pre-multiplied alpha or not pre-multiplied. The values stored in a pixel for pre-multiplied alpha are actually {R*A, G*A, B*A, A}, while for not pre-multiplied they are {R, G, B, A}. The latter case may be more intuitive for a human as you can see the base colour and opacity separately. However, the former case is favoured inside programs because it saves computations when alpha-blending.

Payam:

Dec 13, 2018 at 06:34 AM

Thanks for your detailed explanation.


Abhishek:

Jan 30, 2019 at 06:48 AM

Thanks for this detailed explanation. It helped a lot.


learner:

Mar 25, 2020 at 06:52 PM

Hi!

Can you please explain this:

uint8_t r = (v >> 16) & 0xff;

why is it not just:

uint8_t r = (v >> 8);

as I assume the r color are the 8 bits right after the 8 alpha bits in argb8888 format?

Thanks from a beginner.


learner2:

Mar 26, 2020 at 01:12 AM

I'm sorry for the stupid question, a plain short circuit in my brain. I assume it is like this:

v>>16 shifts the 32 bits 16 slots to the right and with 0xff the rightmost 8 bits are selected from the remaining 16 bits of the shifted 32 bits?!


Pekka Paalanen:

Mar 26, 2020 at 08:17 AM

Thanks for asking.

As mentioned in the article, the pixel format for that example is set to be argb8888 (bits of uint32_t). This means that of a uint32_t, the R bits are bits 23:16 (or 16-23). In that numbering the lowest bit is bit 0. So the shift-right is 16 to move the G and B bits out of the way, to get the R bits as the lowest ones. Then masking with 0xff gets rid of the A bits, leaving only R bits in the value.

Refer to the Figure 1 caption for the bit numbering, too. The usual convention when writing down the bits of a word (here uint32_t) is to have the highest bit on the left and the lowest bit on the right. That also matches the C bit-shift operators where e.g. >> shifts all existing bits towards right, making the unsigned value of the word smaller.

Newbie:

Jun 24, 2022 at 01:22 PM

>> pixel_address = data_begin + y * stride_bytes + x * bytes_per_pixel.

Shouldn't X and Y in the above equation be interchanged? We need to skip X rows to access the pixel, so shouldn't stride_bytes be multiplied by the number of rows (i.e. X) and not the number of columns?

Pekka Paalanen:

Jun 27, 2022 at 10:21 AM

No. Rows are horizontal, and columns are vertical. X is horizontal coordinate (inside row Y) and Y is vertical coordinate (inside column X). Therefore Y says how many rows we must skip, and X says how many pixels we must skip in addition to that. See Figure 3.
